ANADP11 was a conference about (inter)national alignment – and organizing a conference around such a theme implies that we need more of it. I offer you my tentative conclusions, a fortnight after the conference:

Sometimes aligning is as easy as hopping on a plane and attending a conference in Tallinn. Nothing tops meeting people face to face to exchange ideas and information. Much of the (technical) information can, of course, be found on the internet, but in our day-to-day lives we rarely have or make the time to actually find and read it all. Also: having an actual conversation about something is much more informative than one-way traffic. This type of alignment really requires no more than a bunch of enthusiastic people who are willing to put a lot of time into organizing conferences such as these (thanks! Matt Schultz, Katherine Skinner, Martin Halbert, Aaron Trehub, Abigail Potter, Martha Anderson, Michelle Galinger and, last but not least, Mari Kannusaar of the Estonian National Library). The Tallinn conversation was an important type of alignment in and of itself in the way it was organized around themes, with panels discussing the issues before the conference.

Alignment networks become a little more formal when organizations actually start doing projects together: the Open Planets Foundation, the Alliance for Permanent Access, the US National Digital Information Infrastructure and Preservation Program (NDIIPP), the national digital preservation coalitions (nestor, DPC, NCDD). These are foundations with by-laws. Typically, they will run outreach and R&D programs together. A more informal, but very successful type of network is the International Internet Preservation Consortium (IIPC). It is fluid, it is informal. One wonders whether these need more international alignment than they already have. Perhaps more of these groupings are called for, especially in countries that have not yet embraced the issue of digital preservation, but if so, then these must really be bottom-up initiatives.

The organizational panel’s breakout session pleaded for fluid, informal international alignment rather than an international steering committee, as proposed by Laura Campbell in her keynote address.

Alignment of necessity becomes more formal when organizations start sharing the burden of digital preservation: LOCKSS, the MetaArchive, etc. Taking care of each other’s collections requires governance contracts to be drawn up. However, one wonders if these types of initiatives are in need of more or further international alignment; they seem to work best when similar organizations (similar as in: close together, with similar remits, of similar size, within the same scientific discipline) group together to do a very practical job. I thought the Alabama Digital Preservation Network (blog post) was a particularly powerful example which can inspire others. We definitely need to continue to organize conferences to highlight such initiatives for others to learn from (and enable our staff to come to these conferences despite budget cuts …), but whether they need more (international) alignment as such … I wonder.

Sharing the burden of preservation requires more robust support, but often at a local and/or disciplinary level (St. Catherine’s passage, Tallinn)

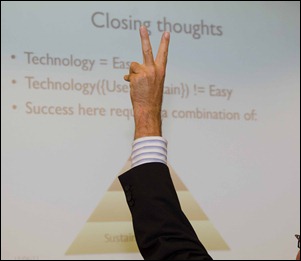

Technical development, then, and standards. Are we reinventing the wheel over and over again? Will alignment help us save money? Yes and no. Of course we would save a lot of money in the short run if we were all to adopt the same technology. However, digital preservation is a moving target. Michael Seadle and Andreas Rauber assured us that all our present systems are untested; they are, indeed, “a leap of faith”. So this is no time to “rush into standards” (Bram van der Werf). In other words: exchanging experiences, exchanging test data: yes; throwing all of our eggs into one basket at this (early) point in time: definitely not. We need to allow for diversity in preservation strategies and tools to develop. This will, of course, lead to some redundancy, but that is all in the game.

So far, we have made a strong case for more conferences, more conversations, more debates, more projects, more comparing of notes, more sharing of experiences (test data, best and, yes please, worst practices), the establishment of fluid, informal affinity groups to share knowledge. Plus more formal alignment on a local or national scale when organizations actually start collaborating in preserving their digital collections. But we have not yet made the case for more international alignment. Unless … one thinks about …

An international digital preservation registry?

During the conference the call was heard for some type of international registry of projects, initiatives, knowledge, competences, etc. I have heard that call before, and wouldn’t it be great to have such a facility? A one-stop portal where everything that we know about what is being preserved by whom, everything we are studying (including everything we have found out that does not work), and perhaps even more importantly, everybody involved in DP and their special expertise, is linked up and a brokering service brings supply and demand together. Not a project, but a sustained effort by the international community.

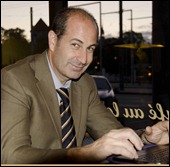

The trouble is that we once had something like that: the PADI service, run by the Australian National Library. But, as Maurizio Lunghi (photo left) explained at the conference, that service was discontinued last year. The website is still up, but it is not being maintained any more. PADI went down, Lunghi told us, because it started out as an international effort, but in the course of time it became isolated, and the Australian National Library was doing all the work alone. And that is the trouble with this type of registry/competence center. Everybody wants it, but establishing and maintaining one is very labour-intensive. Especially in difficult economic times such as the present, our institutions’ management will not prioritise active knowledge sharing. That is understandable in the short term, but obviously unwise in the long term. I have been involved in organizing something like this at a national scale, and apart from the staff effort involved, it is also very difficult to make all that knowledge and experience available in a form that is useful to users – simply because there are so many users with so many different needs.

But wouldn’t it be great … I am not giving up the idea and I welcome anybody to think this through with me!

The Digital Elephants in the Room

So much for the problems that we did discuss. Now for the ones we did not discuss (enough). Clifford Lynch called them the Elephants in the Room, the issues we chose not to talk about because … yes, well, why did we not discuss them? I have a couple of theories. For one, the conference room was dominated by librarians; there were very few people from archives, museums, or scientific repositories. That was a shame, because it limited the scope of issues to be discussed. In particular, those issues that transcend traditional borders between domains and sectors were not talked about nearly enough. And I would argue that these are the very issues where we absolutely need international alignment because there is no other way to deal with them. Here is my list of these Key Issues (which I will be happy to develop further with anybody who wants to join the debate):

- Lots and lots of digital content is being produced at the moment that no heritage institution is collecting, because it does not fall within traditional collection profiles. It is high time we get together at a high policy level, with representatives from a broad range of organizations (museums, libraries, archives, scientific institutions) to talk about this huge challenge. This involves talking about data deluges (in science, but also in social media; audiovisual output for which there is no Public Records Act and no Legal Deposit Scheme), it definitely involves talking about selection (what to keep?), and who is going to do the keeping (distribute the work? establish new organizations?)

- Making the case for digital preservation to funders and the public at large. This is a big one. It may force us to take another look at the assumptions underlying our work before we can get this right. Which is not something people particularly like doing. But we’ve got to do it, and make sure that we speak with one voice. Can we write up the success cases to prove our point?

- Where do we put our money? Both in Europe and in the US substantial amounts of money are invested in digital preservation research. But is R&D attacking the right problems? Where should we put our money to really start bringing costs of digital preservation down?

- Should effective copyright action be in this list? Clifford Lynch suggested that we get some smart people from both sides of the ocean together to really get to the bottom of the issue and organize an effective lobby to truly bring about a fundamental change in thinking about the individual (producer’s) rights versus public rights.

- Dare we think of an international registry that will really allow the international community to reinvent wheels only where alternatives really are beneficial?

At the end of the day I think I can say that no, we did not get everything right at this first ANADP conference. But we made a good start at filtering out the issues and deciding where they should be addressed and by whom. That in itself is important. There is talk of a follow-up workshop at iPRES and there is talk of a follow-up ANADP. Let’s try to include more parties there (more countries, more domains), let’s try to bring more decision makers to the debate, and let’s try to narrow the issues down to those areas where alignment is truly essential.

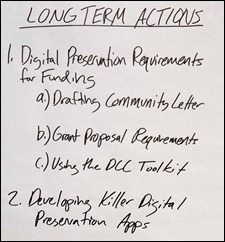

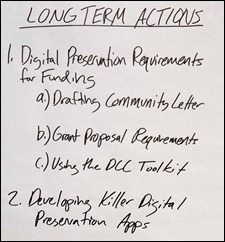

My favorite conference recommendation, by Jeremy York of HathiTrust: ‘Developing killer digital preservation apps’

This is the last of a series of 11 blog posts about the Tallinn conference.

Martin Halbert (left) and Matt Schultz of Educopia, two of the driving forces behind this initiative to open up a transcontinental policy debate.

Many thanks to Mari Kannusaar of the Estonian National Library (right), thanks to her colleague Leila and all the other colleagues for a smooth conference!

Yours truly will spare no effort to tell you everything; here is my paparazzi shot at the VIP room: LIBER President Paul Ayris (left) and Executive Director Wouter Schallier.
