Inge Angevaare's blog about long-term access to digital information in the Netherlands and abroad

Blog of the coordinator of the Dutch Digital Preservation Coalition NCDD

Saturday 17 December 2011

This blog has moved

As of 15 December 2011, this blog has moved, archive and all, to the NCDD website, new url: http://www.ncdd.nl

or directly to the weblog, new url: http://www.ncdd.nl/blog/

Thursday 8 December 2011

DISH2011 wrap up: the digital shift and us (DISH 4)

‘In many ways, digital surrogates are more useful, more accessible and more robust than physical objects. That is deeply upsetting for people who have dedicated their entire lives to collecting and maintaining physical objects.’

‘There are many more opportunities now for users to engage and to participate. Sometimes user impact is quite trivial, but it can also be very profound. For a lot of content, there is somebody out there who knows much more about it than we do and he is able to get in touch with us. Just think of the vast volumes of audiovisual content from our living memory. But user generated content does raise issues of trust: to what extent will we, memory organizations, be able or willing to vouch for this content?’ And there is more, ‘These participants may want to contribute more than just tags, they may bring us their own archives, expecting that there should be a place for the memories of all of us.’

So the question becomes: are we adapting to this new environment? I attended a workshop session on 'national infrastructures' and heard Marco de Niet of the DEN Foundation say: 'We should have done this ten years ago.' He was commenting on Dutch plans to use the Europeana structure and tools to aggregate content from a variety of Dutch institutions on one discovery platform. They call it the 'Netherlands Cultural Heritage Collection' - but really, it is metadata only and, if we are lucky, we will get some thumbnails. A workshop attendee asked the critical question: "Will our users be satisfied with just metadata?" Joyce Ray of the US IMLS figured that no-one would be able to find the money to aggregate the content as well.

But should such practicalities stop us from making bold moves?

In order to give us a sense that all of this is doable, the conference organizers had contracted strategist Michael Edson of the US Smithsonian Institution to give us a final pep talk the American way. His advice: stop thinking and talking in terms of ‘the future’. The pace of innovation is so quick now that we simply cannot spend months or even years talking about strategy. Because if we do, we will fail to recognize the things about digital culture that we can bank on now. In other words: ‘It is all a matter of going boldly into the present.’ Strategy should do work. It is a tool. (The text of his entire speech is on slideshare (edsonm).)

Michael Edson

This is what Edson offered to take with us into the office this Monday morning:

I would say: good luck to all of us!

Grabbing digital preservation by the roots - #DISH2011, 3

Karin van der Heiden (right) with Job Meihuizen of Premsela.

If you think that this is perhaps too basic a level, just remember this: more and more digital content is being produced outside the sphere of influence of heritage institutions. Can you see the boxes of junk coming your way in 10 or 20 years' time and the troubles and expense they will cause? Educating everybody is therefore important to all of us. Karin's mission is to make basic preservation measures doable, and to enable designers, artists, researchers and everybody else to easily integrate basic measures into their workflow:

Great stuff. I'll let you know when the US edition becomes available.

Playing the 'digital lifecycle game' #DISH2011, 2

Rony Vissers (Packed, Belgium) searching for answers

Shawn Day, Digital Humanities, seems to feel the threat

Wednesday 7 December 2011

Are heritage institutions 'living the digital shift'? #DISH2011, 1

The conference covers many angles of the digital shift, but obviously I will be on the lookout for sessions and papers dealing with long-term access. Having that focus makes it easier to make choices at this conference, which boasts three blocks of no fewer than fifteen (!) parallel sessions - which means you always miss 14/15ths of what's on offer. That's a lot to miss, and somebody tweeted: I hope the three plenary keynote presentations make up for the 'sacrifice'.

Amber Case, 'living the digital age', despite her admittedly 'analogue' upbringing. 'In my own back yard, I understood the limits of my mental and physical capabilities.'

Chair Chris Batt with a breakdown of the audience of more than 300 attendees, 75% (my estimate) from the Netherlands

Monday 5 December 2011

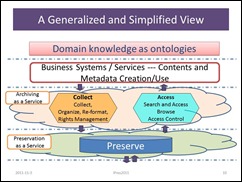

An "infrastructure": what is it and how do you build it?

How do you tackle something this big? One method is the Delta Plan method: large-scale, top-down. In polder country the Netherlands you rarely see anything like it. The water really has to be up to our lips, as literally happened in 1953. For the long-term usability of our digital files, such a movement has not (yet) come about. We (say, information professionals) do know about the time bomb ticking under our digital information, but that urgency is by no means felt as pressing by all administrators.

- Storage (storing the bits and bytes reliably and, above all, as efficiently as possible, including network connections)

- Preservation (what exactly do we need to do to safeguard that durability: monitoring developments (preservation watch), planning preservation actions, the software tools you need for that, R&D, and above all a lot of knowledge)

- Coordination of collection policies (digital information does not fit easily into the traditional division of tasks between institutions; new agreements have to be made about that)

- Quality assurance and certification (when is an archive a 'trustworthy digital repository'? How do you prove it?)


It is my privilege to support all this work from the NCDD, and I can tell you, the working groups are not having an easy time. Long-term accessibility is a young discipline with a great many uncertainties. Who can predict what kind of computers we will have in 10 or 20 years? Who dares predict how fast the web will keep growing? Who dares select what we should and should not keep? Who dares say definitively today what the best preservation strategy is? And what about all the administrative and legal complications?

It is a tall order. Nevertheless, we have set to work in good spirits. There is brain-racking thinking and writing going on. Ideas are floated and sometimes swept off the table again, only to resurface later when other alternatives have proved unfeasible.

But we need help. From you. That is why we are organizing:

NCDD symposium "Bouw een huis voor ons digitaal geheugen" ("Build a house for our digital memory"),

24 January 2012, KB, The Hague, 10:30 to 16:30, admission free, but please do register

Programme and registration at http://ncddsymposium.eventbrite.com.

Wednesday 30 November 2011

Digital preservation basics in four online seminars

If you are new to digital preservation, you may want to check out four ‘webinars’ organized by the California State Library and the California Preservation Program. The one-hour webinars promise to give you a basic understanding of what digital preservation is all about, of interest especially to librarians and archivists who are involved in developing digital projects.

The first webinar is scheduled for December 8, 12 PM Pacific time (which is 21.00 hrs in Holland). Topics include: ‘storing digital objects, choosing and understanding risks in file formats, planning for migration and emulation, and the roles of metadata in digital preservation.’ See http://infopeople.org/training/digital-preservation-fundamentals.

Wednesday 23 November 2011

‘Mind the Gap’ and Archive-it – on web archiving (iPRES2011, 9)

At a reception the other day, I heard a rumour. Because preserving web sites is so difficult, the Internet Archive was rumoured to consider printing all of its content. I will not disclose the informant’s name – he would not have a future in the digital library where he works (OK, it was a guy, a young guy, and he works for a Dutch library.) Needless to say, it could not even be done if the Internet Archive wanted to do it. Lori Donovan told the iPRES audience that a single snapshot of the www nowadays results in 3 billion pages [for the Dutch: 3 miljard pagina’s].

Mind-boggling numbers, especially if you think of the Internet Archive’s shoestring budget.

Anyway, iPRES2011 is over, but I still have some worthwhile stories waiting to be told. One of the issues tabled at iPRES was whether we can (and/or should) safely leave web archiving to the Internet Archive and national libraries.

Logistics put the Internet panel members much further apart than their viewpoints would warrant: they agreed that web archiving is important, not just for national libraries. From the left: Geoff Harder, University of Alberta, Tessa Fellon, Columbia University, and Lori Donovan, the Internet Archive.

No, said Geoff Harder of the University of Alberta and Tessa Fellon of Columbia University. There are compelling reasons for research libraries to get involved as well. Harder: “This is just another tool in collection building; we should not treat it any differently. You begin with a collection policy and an expanded view of what constitutes a research collection: build on existing collections; find collections where research is happening or will happen.”

I would say that perhaps there are even more compelling reasons to collect web content than, e.g., printed books, because web content is extremely fleeting. Harder told his audience: “Too much online (western) Canadian [content] is disappearing; this creates a research gap for future scholars and a hole in our collective memory.” He encouraged research libraries to: “Mind the Gap – Own the Problem”.

The University of Alberta’s involvement in web archiving started with a rescue operation: a non-profit foundation which created some 80+ websites, including the Alberta Online Encyclopedia, went out of business. This was extremely valuable content, and it needed to be rescued fast.

When a time bomb is ticking …

The University of Alberta decided to use Archive-It, a service developed by the Internet Archive. It is a light-weight tool that is easy to get up and running immediately. Plus, said Harder, there is a well-established tool-kit including dashboard and workflows, you become part of an instant community of users and your collection becomes part of a larger, global web archive. Because that is a precondition for working with Archive-It: by default, everything that is harvested becomes publicly available globally. Harder: “It is an economical tool for saving orphaned and at-risk web content … where we know a time bomb is ticking.”

Have a look at the collections built with Archive-It, I would say to research libraries’ subject specialists. You can include anything that is interesting in your field, such as important blogs, for as long as they are relevant.

Yunhyong Kim of HATII, Glasgow, takes blogging very seriously and is doing research into the dynamics of the blogosphere.

Q&A

Is Archive-It durable enough? asked Yunhyong Kim of Glasgow (HATII). Donovan appeared confident that Internet Archive would be able to continue developing the tool. And I would repeat Harder: when a time bomb is ticking, you have got to go with what is available.

What about preventing redundancy, was another question. Should we not keep a register somewhere of what is being archived? Fellon thought that was a good idea, but perhaps it was too early for that. “There are many different reasons for web archiving, different frequencies.” Sorting out what overlaps exactly and what does not is perhaps more work than just accepting some “collateral damage”.

If you want to know more about Archive-It, you can sign up for one of their live online demos. There’s one scheduled for November 29 and one for December 6. See the website.

Monday 21 November 2011

PDF/A-2: what it is, what it can do, what it cannot do, and what to expect in the future

There is a new PDF ISO standard, 19005-2, or PDF/A-2, and therefore the Benelux PDF/A Competence Center decided to organize a seminar. When one of the organizers, Dominique Hermans of DO Consultancy, asked me to do the warming-up presentation, I readily agreed, because I had been hearing some bad things about PDF these last few months, and was eager to find out more. While preparing my own talk (slides at the end of this post) I decided to quote those very criticisms (see LIBER2011 blog post), just to get the ball rolling and challenge the experts to comment:

This slide of mine is a mash-up of three slides by Alma Swan at the LIBER 2011 conference, Open Access, repositories and H.G. Wells

These criticisms come from people who want machines to analyse large quantities of data in a semantic-web/Linked Data-type environment. Are the criticisms justified? For those of you who, like me, are sometimes confused about what is and what is not possible, I will summarize what the experts told the seminar.

The key one-liner came from Carsten Heiermann of LuraTech:

“PDF was designed as electronic paper”

‘It was designed to reproduce a visual image across different platforms (PC, Mac, operating systems), and for a limited period of time.’ As such, PDF was a really good product, because it was compact and complete and it allowed for random access. But there were also many issues, and Adobe has been working on fixing those ever since. This has resulted in an entire family of PDF formats with different functionalities.

PDF/A is the file format most suited for archiving purposes. The new standard, PDF/A-2, is not a new version of PDF/A-1 in the sense that one would need to migrate from 1 to 2, but rather a new member of the PDF family tree that has improved functionality over PDF/A-1. In other words: migrating from PDF/A-1 to PDF/A-2 is senseless, but if you are creating new PDF documents you may want to consider PDF/A-2 because of the new functionality to incorporate more features from the original document (e.g., JPEG2000 compression, possibility to embed one file into another, larger page sizes, support for transparency effects and layers).

To make matters more complicated, PDF/A-2 comes in two varieties: compliance level 2a and compliance level 2b. Level a allows for more access by search engines such as those used in semantic web techniques, because it requires that files not only provide a visual image, but also be structured and tagged and include Unicode character maps.

Heiermann concluded: XML is for transporting data; PDF is for transporting visual representations. To which I may add: XML is for use by machines, PDF is for use by humans.

Misuse of PDF is easy

Raph de Rooij of Logius (Ministry of the Interior) told his audience that one should not be too quick to say that something is “impossible” with PDF. A lot is possible, but you have to use the tools the right way – and that is where things often go wrong.

Raph demonstrated that most PDFs put online by government agencies do not meet the government’s own requirements for web usability – including access by those who are, e.g., visually impaired. “The many nuances of the PDF discussion often get lost in translation,” he said. The trick is to pay a lot of attention to organizing the work flow that ends in PDFs.

PDF is no silver bullet

Ingmar Koch, a well-known (blogging) Dutch public records inspector, has seen many examples of PDF misuse. “Public officials tend to think of PDF as a silver bullet that solves all of their archiving problems.” But PDF was never designed to include anything that is not static (Excel sheets with formulas, movies, interactive communications, etc.).

From the left: Caroline vd Meulen, Ingmar Koch, Bas from Krimpen a/d IJssel and Robert Gillesse of the DEN Foundation.

From a preservation point of view, I heard some shocking case studies from public offices. An official will type the minutes of a council meeting in Word, make a print-out, have the print-out signed physically, then OCR the document and convert it to PDF for archiving. I dare not imagine how much information gets lost in the process. But then again, we all know that data producers’ interests are often different from archives’ interests. Public offices just want to make a “quick PDF” and not be bothered by all the nuances.

How about validation?

There is a lot of talk about “validating” PDF documents. First of all, PDFs are created by all sorts of software, and what they produce often does not conform to the ISO standards and is thus rejected by validators. Things get more confusing when validators turn out different verdicts. Heiermann explained: “That’s because some validators only check 30%, whereas some will check 80%. The latter may find something the first did not see.”

At the end of the day …

It seems that, indeed, there are millions and millions of PDFs out there that can only provide a visual representation and are no good when it comes to Linked Data and the Semantic Web. But PDF is catching up, including new features all of the time. I understand that we may even expect a PDF/A-3, which supports including the original file format in the digital object. Ingmar Koch did not seem to be too happy about such functionality. It would make his life as a public records inspector even harder. But from a preservation point of view, that just might be as close to a silver bullet for archiving as we will ever get.

Meanwhile, if you want to use PDF in your workflow, getting some advice from an expert about what type of PDF is appropriate in your case is called for!

Comments by Adobe

Adobe itself was very quick to respond to this blog post in an e-mail I found this morning. Leonard Rosenthol, PDF Architect, was not very pleased with the picture painted by the above workshop – as a matter of fact, he used the word “appalled”. He asserted that PDF and XML/Linked Data go very well together and that various countries and government agencies have already adopted a scenario that ‘presents a best of two worlds’. Here is his link to a recent blog post by James C. King that describes how it is done: http://blogs.adobe.com/insidepdf/2011/10/my-pdf-hammer-revision.html.

That blog post is an interesting addition to the workshop results (confirming Raph de Rooij’s assertion that “nothing is impossible”), but it does not take away the fact that PDF is often misused. I would guess that is because it is complicated stuff. “Making a quick PDF” just does not do it. The recommendation to seek expert advice, therefore, stands!

Lastly, here is my own presentation: a broad overview of developments in the digital information arena to start off the day – in Dutch:

For the Dutch fans: Ingmar Koch has blogged about this event here, and the slides will become available here. Thanks also to KB colleague Wouter Kool for helping me understand PDF.

Sunday 20 November 2011

‘Bewaar als …’ (‘Save as …’): crystal-clear advice on digital archiving

Karin van der Heiden, together with Premsela (the Netherlands Institute for Design and Fashion), has developed a crystal-clear leporello (fold-out brochure) that gives designers practical guidance on organizing and storing their information properly – and that is the beginning of all long-term access. It is important not only for designers, but for everyone who creates digital documents and wants to keep them properly!

Congratulations, Karin, on this production!

Have a look at the accompanying website, http://bewaarals.nl/, and spread the word!

PS: The full sheets are below, in .jpeg.

An English edition will be made available in the US in a few months. I will keep you posted.

Tuesday 8 November 2011

Aligning with most of the world (iPRES2011, 8)

iPRES is organized alternately in Europe, in North-America and in Asia in order to include people and discussions from all continents – Africa and South-America are still on the Steering Committee’s wish list. However, when you looked at the list of presenters at iPRES2011, it was the usual suspects that dominated: Europe, North America, Australia/New Zealand. I asked a Programme Committee member about that, and he told me that some papers had been submitted from Asia, but they were deemed not good enough to make it to the programme.


To my mind, there is a bit of a contradiction in this. Of course we want high-quality papers at iPRES, but it is a bit risky to take our (western) stage of development as a yardstick for what constitutes “quality”. As Cal Lee phrased it: “Digital preservation tends to be quite regionally myopic.” I would suggest that the next iPRES organize a special track or workshop day for those who are just beginning to think about digital preservation, or who work from a very different context than a “western” one, and focus on their specific circumstances and challenges.

Fortunately, there was one workshop that expressly invited members from “other” countries. It was the workshop “Aligning national approaches to digital preservation”, a follow-up from last May’s Tallinn conference (see my blog posts), put together by Cal Lee from the University of North Carolina. Yes, there were usual suspects presenting as well (including yours truly), but in this post I shall mostly ignore them in favour of new input:

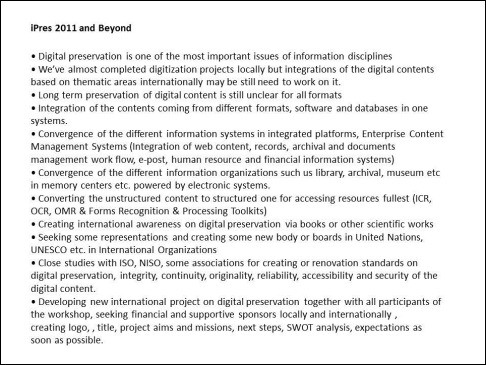

Özgür Külcü, from Hacettepe University, Ankara, Turkey, described Turkish participation in the AccessIT project, whereby an online education module with practical information about digitisation issues and protection of cultural heritage was developed. And in the context of the InterPares 3 project the Turkish team is helping translate digital preservation theory into concrete action plans for organizations with limited resources. But many issues remain:


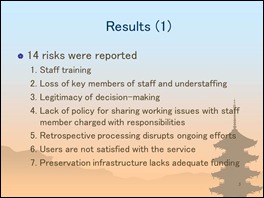

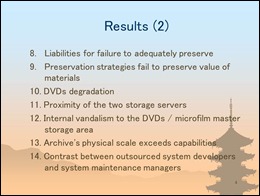

Masaki Shibata from Japan revealed the results of a DRAMBORA 2.0 test audit carried out at the National Diet Library in Japan:

Shibata admitted that, unfortunately, the risks mentioned in the final report largely remain unsolved. ‘We were caught up in an illusion that there was an ideal solution to ensure long-term digital preservation,’ he said. ‘We tried to address the risks only by means of systems development.’ Also, specific Japanese and NDL circumstances played a role, such as the rigidness of the fiscal, budget, employment and personnel system; language difficulties and geographical constraints; lack of digital conservators; and a cultural context of preservation. Shibata concluded that an international alliance for digital preservation ‘would become a boost/tailwind for national policymaking in Japan.’


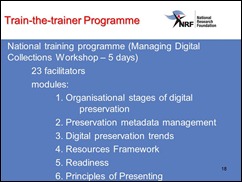

Daisy Selematsela from the National Research Foundation of the Republic of South Africa described the outcomes of “An audit of South African digitisation initiatives” before focussing on “Managing Digital Collections: a collaborative initiative on the South African Framework”, a report published earlier this year, which is meant to provide data producers with high-level principles for managing data throughout the digital collection life cycle; and the Train-the-trainer programme:


As for international alignment, Selematsela concluded:

Raju Buddharaju of the National Library of Singapore (photo right) suggested that we first need a better understanding of what we mean by “alignment” and what we mean by digital preservation (what do we include, what do we exclude) before we can try and come to workable initiatives.


The workshop was originally designed as a one-day event, but in the end the conference organizers only gave us 3 hours on Friday afternoon. The good news was that despite the time of day and conference fatigue, more than forty participants showed up and they conducted animated discussions on such topics as: costs; public policy and society; and preservation & access.

But it was difficult to reach any concrete conclusions. There are many good intentions, but it continues to be difficult to find the common ground that leads to practical results. Steve Knight of the National Library of New Zealand (photo left) questioned whether there is any real will to collaborate, e.g., on putting together a much-needed international format (technical) registry. Talking about education, finally, Andi Rauber suggested that because there is no well-defined body of knowledge, we might prefer a range of “friendly competing curricula” rather than an aligned body – for the time being.


Which only goes to show that, like Singapore itself, alignment comes in many shapes and sizes.

Disaster planning and enabling smaller institutions (iPRES2011, 7)

As this iPRES was moved from Tsukuba, Japan, to Singapore because of the earthquake and tsunami in Japan in March this year, it was only fitting that iPRES2011 should include a panel session on disaster planning. Neil Grindley (JISC) asked if digital preservation does not implicitly include disaster planning, but Angela Dappert (DPC) argued that with an entire infrastructure going down, the problems will be massively larger. Plus, as Arif Shaon (STFC) observed, ‘Grade A preservation should include it, but we have not reached that stage yet.’

Shigeo Sugimoto of Tsukuba, who would have been iPRES’s host in Japan, took a forward-looking view at disaster planning. Many physical artefacts were lost during the earthquake, and having lots of digital copies at different locations can certainly help rescue cultural heritage, provided the metadata are kept at different locations as well.

Shigeo Sugimoto (right) with José Barateiro of Portugal during the disaster planning session.


There is one catch, though: many smaller institutions do not have the means (money, staff) to build digital archives. Therefore, in Japan the idea has been put forward to design a robust and easy-to-use cloud-based service for small institutions:

In the Netherlands, I am involved in two Dutch Digital Preservation Coalition (NCDD) working groups who are looking at the same problems: how to enable smaller institutions to preserve their digital objects. Professor Sugimoto and I have agreed to stay in touch and exchange information and experiences.

Sunday 6 November 2011

‘At scale, storage is the dominant hardware cost’ (iPRES2011, 6)

It is not uncommon for conferences to be ‘interrupted’ by sponsor presentations. When I say ‘interrupted’, I do not necessarily mean that such talks are unwelcome. Conference days tend to be packed from early morning to late at night, and such sponsor interventions can be quite pleasant – a moment to doze off or to check your e-mail. Robert Sharpe (photo) of Tessella (vendors of the Safety Deposit Box or SDB system) gave us no such respite. In an entertaining presentation he shared some scalability experiences with us.


The case study was Family Search, which ingests no less than 20 Terabyte of images a day. That was quite a scalability test for the Tessella Safety Deposit Box system, and it tested some of Sharpe’s own assumptions:

- Tessella expected that they would need faster, more efficient tools, but it turned out that existing tools (DROID, Jhove, etc.) were easily fast enough.

- Tessella expected reading and writing of content to be fast compared to processing, but it turned out that reading and writing were not fast enough; the process required parallel reads and parallel writes. Thus the hardware cost is dominated by non-processing costs.

- Tessella (and most of us) expected storage to be cheap, but at scale it turned out to be the dominant hardware cost. Reading and writing hardware came to about GBP 80,000. The storage costs came to GBP 100 per Terabyte content (3 copies), which amounted to GBP 730,000 a year, each year, and without refreshment costs.
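The annual storage figure in the last point can be reproduced with a little arithmetic. Below is a minimal sketch in Python, assuming a 365-day year and an ingest rate that stays at 20 Terabyte a day all year round. The function and constant names are my own illustration, not anything from Tessella, and the talk did not spell out how the per-Terabyte price is spread over time.

```python
# Back-of-the-envelope check of the storage figures quoted above.
# Assumptions (not from the talk): a 365-day year and a constant ingest rate.

DAILY_INGEST_TB = 20    # Family Search ingest, Terabyte per day (from the talk)
COST_PER_TB_GBP = 100   # storage cost per Terabyte of content, 3 copies (from the talk)
DAYS_PER_YEAR = 365     # assumption


def annual_ingest_tb(daily_tb, days=DAYS_PER_YEAR):
    """Terabytes of new content ingested in one year."""
    return daily_tb * days


def annual_storage_cost_gbp(daily_tb, cost_per_tb, days=DAYS_PER_YEAR):
    """Storage cost in GBP for one year's worth of newly ingested content."""
    return annual_ingest_tb(daily_tb, days) * cost_per_tb


if __name__ == "__main__":
    print(annual_ingest_tb(DAILY_INGEST_TB))                          # 7300
    print(annual_storage_cost_gbp(DAILY_INGEST_TB, COST_PER_TB_GBP))  # 730000
```

Set against the GBP 80,000 for reading and writing hardware, one year of storage comes to roughly nine times as much, which is the point of the headline.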

Sharpe concluded that we do not need faster tools – but we do need better & more comprehensive tools. We need systems engineering, not just software engineering. And we need enterprise solutions: automation, multi-threading, efficient workflow management and automated issue handling.

All of which, of course, Rob will be happy to talk to you about.

PS: In response to this blog post, Rob wrote to me: ‘A further point I was trying to make in the rest of my talk is you don't need especially powerful application servers to do this: you can do it fairly cheaply (certainly when compared to other costs at such scale).’

Scale Singapore-style: the Marina Bay Sands Hotel. The ship-like contraption on top of the three towers holds lush tropical gardens, a 150-meter swimming pool, restaurants, and a bar.