Below are the first impressions I gathered during yesterday's Curating Research conference in The Hague and presented as a 'wrap-up' at the close; for once, in English. See also the previous blog post.

First impressions as I presented them yesterday to the conference Curating Research (The Hague, 17 April 2009) during the final session.

A long, long time ago … that is: early this morning, the organisers started us out on a very ambitious agenda. I quote Hans Jansen (opening speaker, KB): ‘At the end of the day you will be able to assess the preconditions for implementing long-term preservation in your own organisation – both in terms of policy, technical infrastructure and organisational development.’

So, I ask you, audience: ARE YOU?

[as the room remains quite silent ...]

Perhaps a few highlights will help you answer that question.

Eileen Fenton (Portico, a US digital archive) painted a very clear general picture of the digital curation landscape: digital information is exploding, and we need to manage that information to safeguard it for future generations. And preservation is only a means to the all-important end of access.

How do we do it? Well, permanent access does not happen by accident. It takes work, work we still have many uncertainties about, as this is a new field. One thing Eileen told us right away: one size does not fit all, this game is complex.

Eileen had some notable advice for librarians: befriend selection, and get close to the creators of the content. They make critical decisions when it comes to keeping research information accessible in the long term.

Her last, and I think very important point: ‘do not go at this game alone’. Find yourself trusted partners to work with, nationally or internationally.

Jeffrey van der Hoeven and Tom Kuipers (KB) presented their PARSE.insight project which surveyed how the LIBER libraries deal with digital preservation. To my mind an important outcome of their survey was the low response rate: out of 400 institutions, only 59 completed the questionnaire. What does this say about the current position of research libraries? They are to some degree aware of the issues, but are hesitant to get involved. Perhaps because the issues are too daunting? Or because others should take on the task?

Dale Peters (Göttingen, DRIVER project) reviewed the many European research projects which are under way to tackle the more technical aspects of digital preservation. Although these do assure research libraries that many technical issues are being dealt with on an international scale, I must admit that the quantity and variety of acronyms in this field sometimes overwhelm me. Fortunately, all of them have websites to which you can refer for more detailed information. And if you cannot find your way, send an e-mail to Dale and she will no doubt help you along.

Dale stressed two important points:

a) We need to work on linking all the digital information that is out there to serve our clients. I am sure nobody in the room disagrees with that!

b) Also, Dale mentioned – almost in passing – that of course not every repository must by definition have long-term preservation facilities. She agreed with Eileen Fenton that trusted third-party services are not only an acceptable but often an essential part of the digital preservation equation.

Maria Heijne (TU Delft Library, 3TU Datacentre) agreed with Hans Jansen that securing long-term access to research data and publications is core business for libraries. In her view, libraries have no choice but to engage in data management. She rhetorically asked her audience: who else could do it? It is libraries that have the experience needed, they just need to give their services a digital twist.

This digital twist – as also stressed by Eileen Fenton – involves working very closely together with the research communities themselves. They all have very distinct workflows and metadata schemes which are also very different from libraries’ traditional schemes, so both sides must do a lot of adapting. Although it is early days yet, I think the 3TU.datacentre is really developing into a best practice of research libraries’ involvement with data curation. 3TU do exciting work in developing an entirely new relationship with the research community to create a win-win situation for researchers and research libraries: better quality data during the research process, which then flow into the digital archive with very little additional effort. For more details, be sure to have another look at her PowerPoint presentation when we publish it on our website. And perhaps we can write an article about it in LIBER Quarterly, Maria?

I was very sorry that I could not be in two places this afternoon. Of course I had fellow rapporteurs in the workshops I could not attend, but there was too little time to integrate their notes here. We will, of course, provide a full account in the next issue of LIBER Quarterly.

Here are my own notes from two of the four workshops (with apologies to the other workshop hosts who no doubt had much to say as well):

National and international roles

This session was led by Keith Jeffery (STFC, UK, and chairman of the Alliance for Permanent Access) and Peter Wittenburg (Max Planck Institute for Psycholinguistics, Nijmegen). They focussed their attention on research itself: what elements of the research life cycle should in fact be preserved, and who is responsible for preserving them? This is a monumental question, especially as the researchers in our group kept stressing how complicated research data are. Only the publication is static; everything else is dynamic and thus difficult to preserve.

Some doubts were raised as to whether libraries are in fact best suited for the job of preserving the manifold elements of the research life cycle. Libraries’ workflows and metadata schemes, it was suggested, are perhaps too ‘library-centric’ to serve the research community properly.

So should perhaps the management of live data, including providing access, be separated from the archiving functions? And, more importantly, should communities themselves take care of curation rather than libraries? Krystyna Marek from the European Commission explained that the e-infrastructure vision of the EU is in fact focussing on the research communities themselves.

I should not forget to mention that Hans Geleijnse of LIBER suggested that we draw up 5 or 10 golden rules of digital curation, to help the community along. UNESCO drew up such guidelines in 1996, but they need modernising and updating. Half the attendees of this workshop volunteered on the spot to help bring this about, which I thought was very impressive.

Problems, preconditions and costs: opportunities and pitfalls

Neil Beagrie (Charles Beagrie Ltd.) took his cue from David Rosenthal, who recently gave a controversial presentation at CNI, saying that our real problems now are not about media and hardware obsolescence, as predicted by Jeff Rothenberg in his famous 1995 article, but rather about scale, cost and intellectual property. ‘Bytes are vulnerable to money supply glitches,’ is a memorable quote, especially in these credit-crunch times.

So, what does digital preservation cost? Marcel Ras shared his experiences with the KB e-Depot, which now archives about 13 million journal articles, thereby providing a sound base for archiving the published output of research. Between now and 2012, however, the size of the e-Depot will grow exponentially, as it will incorporate digitised masters and websites. Yet the cost is expected to remain more or less stable at 6 million euros a year, which includes 14 full-time staff.

What does this say about possible costs for research libraries? Neil Beagrie investigated the costs of preserving research data at higher education institutions in the UK; the report 'Keeping Research Data Safe' is on the JISC website. One notable finding is that preserving research data is much more expensive than preserving publications. Also, as predicted earlier this morning, timing is a crucial factor: good care at creation saves a lot of money in the long run.

Another finding: scale matters. Start-up costs are high, but adding content to existing infrastructures is relatively cheap. The Archaeology Data Service estimates that overall costs tail off substantially anyway with time and scale. This is important for our thinking about funding models and up-front (endowment) payment.

Neil Beagrie concluded his presentation with the observation that when it comes to defining a policy for digital preservation, many higher education institutions still have a long way to go, and the same seems to hold true for research libraries.

As a co-organiser of this workshop I would not dare presume that we have answered all your questions, but I do hope that this day has helped you a little further along this no doubt complicated, but also very exciting road.

(The PowerPoint presentations will be published next week at http://www.kb.nl/curatingresearch; a full report will appear in the next issue of LIBER Quarterly at http://liber.library.uu.nl/, the current issue of which is devoted entirely to digital preservation issues.)

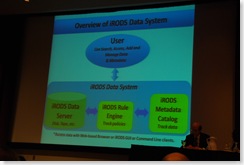

Photographs, top to bottom (IA): Hans Jansen, KB; Eileen Fenton, Portico; Jeffrey van der Hoeven, KB (ducking behind him his PARSE teammate Tom Kuipers); Maria Heijne, TU Delft Library; Neil Beagrie, Charles Beagrie Ltd.; Marcel Ras, KB.

This is how beautiful the Brussels Grand Place looked last Friday: a clear blue sky, improbably beautiful (and sustainably preserved!) baroque facades, crowded terraces and the smell of Flemish fries and Brussels waffles.
